Accessible Re-design of MaxDiff Survey

Role: UX Research Intern at Google (Products for All)

Methods: Survey, accessibility testing, experimental design, mixed-method evaluation

Impact: Findings adopted into Google’s company-wide UXR handbook

Overview

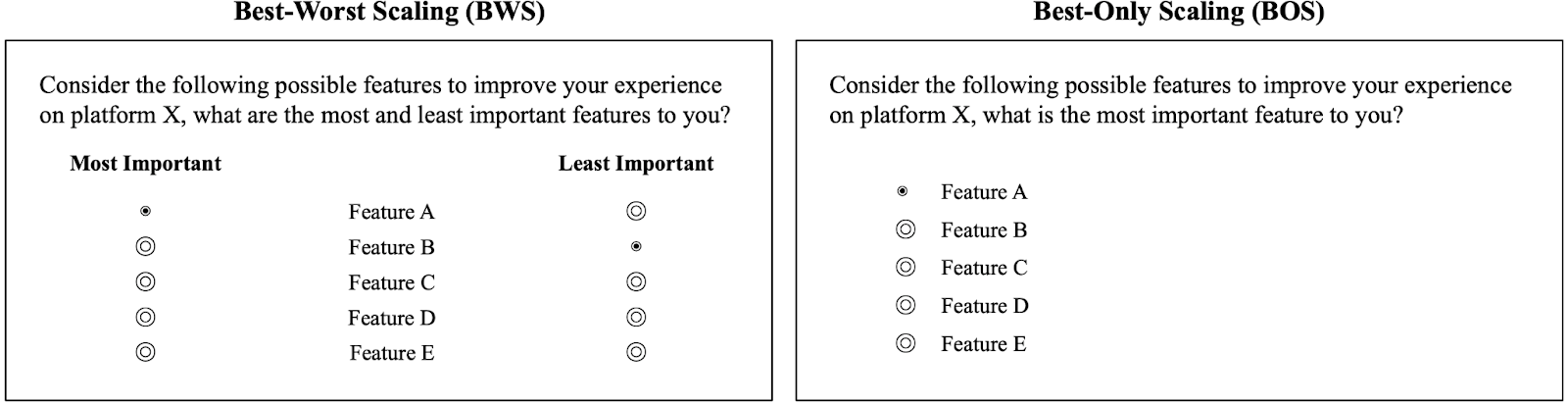

UX researchers often rely on MaxDiff surveys to prioritize product features. While statistically powerful, this method creates significant accessibility barriers for blind and low-vision (BLV) users. During my internship, I investigated whether traditional MaxDiff (best-worst scaling, BWS) could be adapted and simplified to reduce accessibility challenges while still producing rigorous, actionable insights. By combining qualitative usability sessions, cognitive interviews, and a large-scale survey experiment, I demonstrated that a simplified version, best-only MaxDiff (BOS), can both improve accessibility and maintain the methodological rigor product teams rely on.

The Challenge

- For users: Traditional MaxDiff requires selecting both the “best” and “worst” items in a matrix format. This layout is cognitively taxing and difficult to navigate with screen readers and magnifiers, leading to confusion, frustration, and incomplete responses.

- For teams: Inaccessible surveys exclude key user groups, lowering data quality and leaving product decisions less representative. Researchers needed a method that maintained rigor and worked for diverse participants.

My Approach

I designed a three-part research agenda that evaluated BOS in real-world conditions and also helped a product team (anonymized here) better understand the preferences of its users with disabilities. Each phase was chosen to balance depth, scalability, and methodological rigor:

- Qualitative Pre-Test (BLV Users):

  - Why: We started small (8 participants) to capture rich, firsthand feedback before scaling up. This ensured we understood accessibility pain points and usability improvements early.

  - How: 90-minute qualitative cognitive interview sessions comparing BOS and BWS, capturing both navigation challenges and clarity of instructions.

- Large-Scale Survey (BLV Users):

  - Why: To validate BOS’s performance under typical industry conditions. MaxDiff is valued for its statistical precision at scale; we needed to see if BOS could replicate that.

  - How: Recruited 535 BLV respondents to complete BOS on 30 product features. Measured both experience (accessibility, mental demand, focus) and preference rankings.

- Survey Experiment (DHH Users):

  - Why: We wanted to benchmark BOS against BWS with deaf and hard-of-hearing (DHH) users, a group that could access both survey versions.

  - How: Randomly assigned DHH respondents to either the BOS or the BWS condition so we could compare the resulting rankings head-to-head, using descriptive and correlation analysis.
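To make the correlation step of the survey experiment concrete, here is a minimal sketch of how two feature rankings could be compared. It assumes a Spearman rank correlation, which is one common choice and not necessarily the statistic used in the project. The feature scores and the names `average_ranks` and `spearman` are hypothetical and exist only for illustration.

```python
from statistics import mean


def average_ranks(scores):
    """Rank scores from highest (rank 1) to lowest; tied scores share the mean rank."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    ranks = [0.0] * len(scores)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next score ties with the current one.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of the 1-based positions in the tie group
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman correlation, computed as the Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical scores for five features (illustrative numbers, not study data).
# BOS: share of times a feature was picked as best.
# BWS: best share minus worst share.
bos_scores = [0.42, 0.31, 0.12, 0.09, 0.06]
bws_scores = [0.35, 0.38, 0.05, 0.10, -0.20]

print(round(spearman(bos_scores, bws_scores), 2))  # prints 0.8
```

A rank-based statistic fits here because the two conditions produce scores on different scales, while the question of interest is whether they order the features the same way.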

Key Findings

- Accessibility: All BLV pre-testers considered BOS more accessible. Screen-reader users called BWS “frustrating,” while BOS was “straightforward” and “less overwhelming.”

- Clarity: Participants reported that BOS required less effort and reduced cognitive load, an important factor for survey engagement.

- Validation at Scale: With 535 BLV respondents, BOS produced clear top feature rankings with non-overlapping confidence intervals, showing that simplified input still yielded actionable results.

- Experimental Evidence: Among DHH respondents, BOS and BWS rankings were highly correlated, reinforcing BOS’s capability to deliver results comparable to traditional MaxDiff.

- Broader Potential: Because BOS reduces complexity while maintaining rigor, it could also benefit people with cognitive disabilities or teams working under tight timelines and limited resources.
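The "non-overlapping confidence intervals" finding can be illustrated with a small sketch. It assumes a simple count-based score (times picked as best divided by times shown) and a Wilson interval; the project's actual estimation method is not described here, so both are assumptions. The counts and the function names are hypothetical. The sketch also treats every exposure as independent, which ignores that each respondent answers several sets.

```python
from math import sqrt


def wilson_interval(successes, trials, z=1.96):
    """Approximate 95% Wilson score interval for a proportion."""
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half = z * sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return centre - half, centre + half


def best_only_scores(counts):
    """counts maps feature -> (times picked as best, times shown).

    Returns (feature, share, low, high) tuples sorted by share, highest first.
    """
    rows = []
    for feature, (picked, shown) in counts.items():
        low, high = wilson_interval(picked, shown)
        rows.append((feature, picked / shown, low, high))
    return sorted(rows, key=lambda r: r[1], reverse=True)


def clearly_separated(a, b):
    """True when the confidence intervals of two rows do not overlap."""
    return a[2] > b[3] or b[2] > a[3]


# Hypothetical counts (illustrative numbers, not study data).
counts = {
    "Feature A": (320, 700),
    "Feature B": (210, 700),
    "Feature C": (190, 700),
}

ranked = best_only_scores(counts)
for feature, share, low, high in ranked:
    print(f"{feature}: {share:.3f} [{low:.3f}, {high:.3f}]")

print(clearly_separated(ranked[0], ranked[1]))  # A vs B: intervals do not overlap
print(clearly_separated(ranked[1], ranked[2]))  # B vs C: intervals overlap
```

In this toy data, the top feature is clearly separated from the rest while the second and third are not, which is the kind of pattern that lets a team act on the top of a ranking without over-reading the middle.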

Impact

- For product team PMs: Findings informed feature prioritization discussions and demonstrated that inclusivity does not require sacrificing rigor.

- For Google UXR: BOS was formally documented in the company-wide UXR handbook for accessibility-sensitive surveys.

- For the field: Provided one of the first large-scale validations of an accessible MaxDiff alternative, sparking discussions about inclusive methodology.

Reflection

Through this project, I learned how to:

- Evaluate new methods with both qualitative depth and quantitative scale.

- Make intentional design trade-offs (rigor vs accessibility, precision vs inclusivity).

- Communicate findings in a way that resonates with both researchers and PMs, showing not just what we found but why the approach matters.